The restlessness

I had a perfectly stable single-site Kubernetes cluster in the Netherlands. It worked, it was reliable, and by any reasonable measure, it was enough. But I have learned over the years that when things become too stable, too predictable, I start looking for the next challenge; this pattern has followed me throughout my career, moving on whenever circumstances drifted toward a stagnation that I had no power to change.

The initial justification was disaster recovery — what happens if the house burns down? All that work, gone. But if I am being honest with myself, DR was merely the acceptable excuse; the real motivation was the desire to understand how the internet actually works at the routing level, to own that knowledge rather than abstracting it away behind managed services.

Hence, the project scope expanded: not just a second site, but my own Autonomous System Number, my own IP space, actual BGP peering with upstream providers and an internet exchange. The kind of infrastructure I had only ever seen from the outside during my years in enterprise environments.

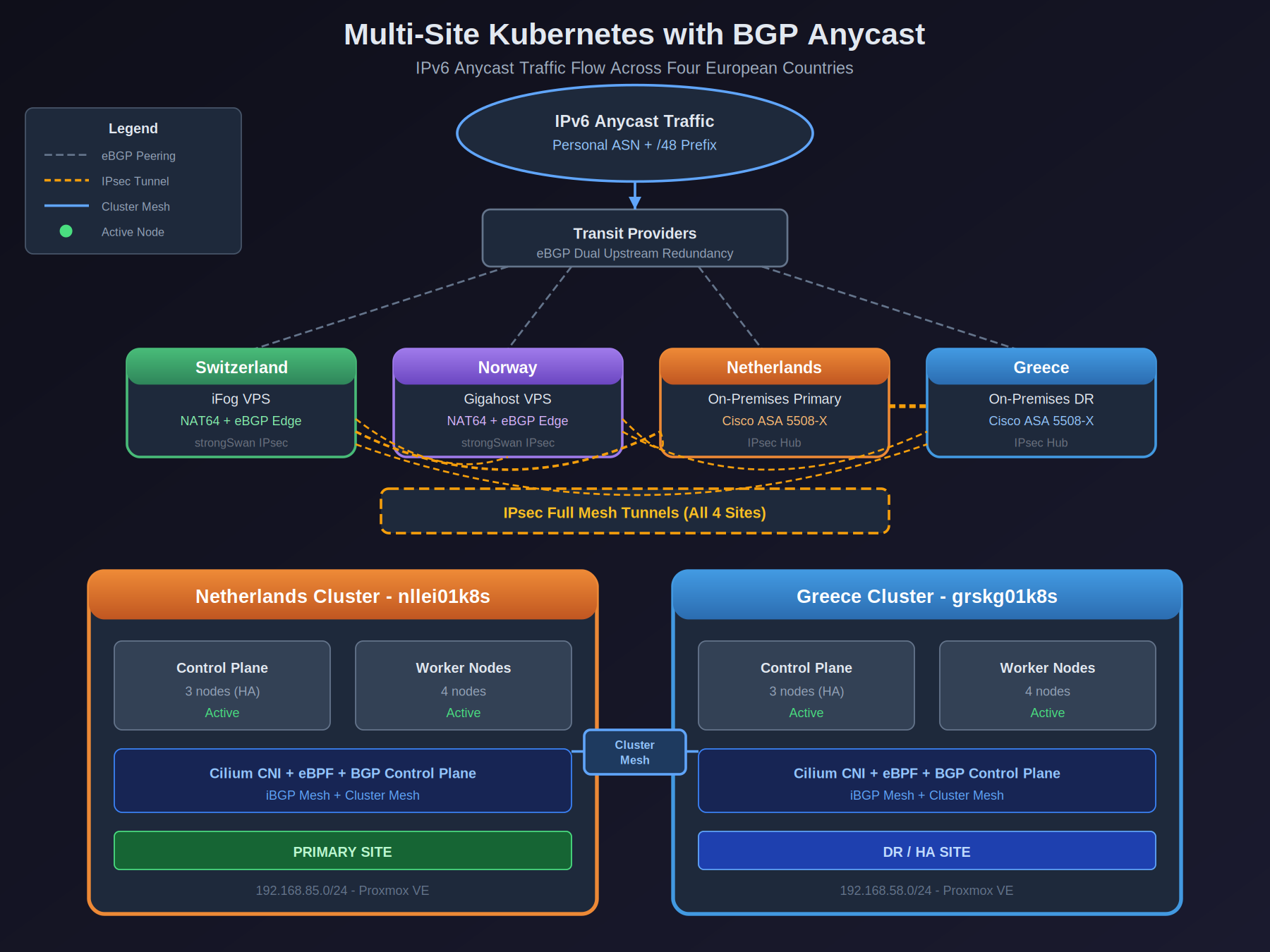

The architecture

AS214304 is my own Autonomous System Number, and 2a0c:9a40:8e20::/48 is the IPv6 prefix registered via RIPE NCC (sponsored by iFog GmbH, who have been excellent for hobbyist allocations).
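To make the size of a /48 concrete, here is a minimal sketch using Python's standard `ipaddress` module. The per-site /56 split and the site names are purely illustrative assumptions; the actual addressing plan is not described in this post.

```python
import ipaddress

# The prefix from this post.
prefix = ipaddress.ip_network("2a0c:9a40:8e20::/48")

# A /48 contains 2**(64 - 48) = 65,536 possible /64 subnets.
num_slash64 = 2 ** (64 - prefix.prefixlen)

# Hypothetical plan: hand the first four /56 blocks to the four sites.
# The site order and the /56 size are assumptions for illustration only.
sites = ["netherlands", "greece", "norway", "switzerland"]
site_prefixes = dict(zip(sites, prefix.subnets(new_prefix=56)))

print(num_slash64)
for site, net in site_prefixes.items():
    print(site, net)
```

Even a generous /56 per site uses only 4 of the 256 available /56 blocks, which is why a single /48 is plenty for a homelab.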

The infrastructure spans 20 nodes across four European countries:

- Netherlands & Greece — on-premises clusters behind Cisco ASAs

- Norway & Switzerland — cloud edge nodes running strongSwan

Connecting it all: 39 FRR BGP sessions, 4 Cilium BGP sessions, and 12 IPsec tunnels forming a full mesh between sites. The encrypted tunnel topology links Cisco ASA on the on-prem side with strongSwan on the cloud edge nodes.

Traffic flow: IPv6 anycast reaches whichever edge node is closest, then traverses the IPsec mesh to the Kubernetes clusters running Cilium with native BGP control plane for dynamic pod routing. NAT64 at the edge enables IPv6 ingress to the IPv4 core, while dual-stack anycast with SNI-based routing handles IPv4 traffic on shared IPs.
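For readers unfamiliar with NAT64, the core idea is that an IPv4 address is embedded in the low bits of an IPv6 address under a translation prefix (RFC 6052). The sketch below assumes the well-known prefix `64:ff9b::/96` and a documentation IPv4 address; the prefix and mapping actually used at my edge are not stated here, so treat both as placeholders.

```python
import ipaddress

# Assumption: the RFC 6052 well-known prefix. A real deployment may use
# a network-specific prefix instead.
NAT64_PREFIX = ipaddress.ip_network("64:ff9b::/96")


def embed_ipv4(ipv4: str, prefix: ipaddress.IPv6Network = NAT64_PREFIX) -> ipaddress.IPv6Address:
    """Place the 32-bit IPv4 address in the last 32 bits of a /96 prefix."""
    if prefix.prefixlen != 96:
        raise ValueError("this sketch only handles /96 translation prefixes")
    v4 = ipaddress.IPv4Address(ipv4)
    return ipaddress.IPv6Address(int(prefix.network_address) | int(v4))


def extract_ipv4(ipv6: str, prefix: ipaddress.IPv6Network = NAT64_PREFIX) -> ipaddress.IPv4Address:
    """Recover the embedded IPv4 address from a translated IPv6 address."""
    addr = ipaddress.IPv6Address(ipv6)
    if addr not in prefix:
        raise ValueError(f"{addr} is not inside {prefix}")
    return ipaddress.IPv4Address(int(addr) & 0xFFFFFFFF)


mapped = embed_ipv4("192.0.2.33")
print(mapped)                      # 64:ff9b::c000:221
print(extract_ipv4(str(mapped)))   # 192.0.2.33
```

The translator at the edge performs this mapping per packet (along with header translation), which is what lets an IPv6-only client reach a service that only has an IPv4 address internally.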

BGP peering

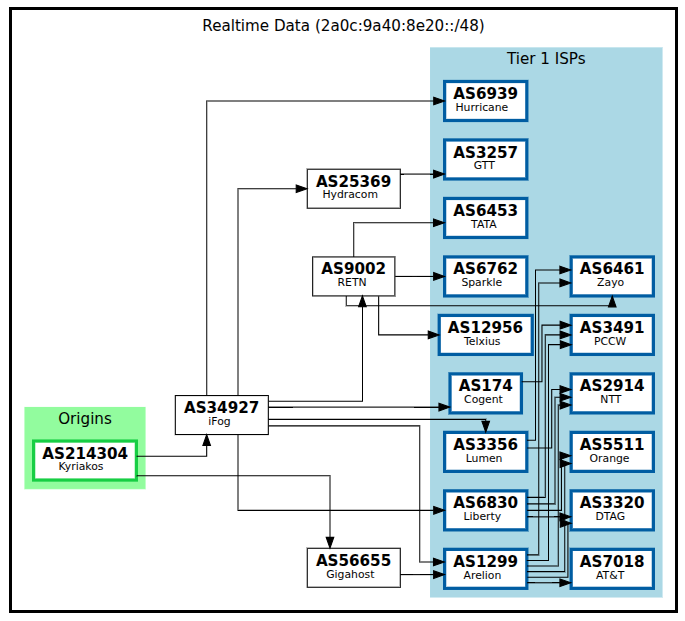

Dual transit provides upstream connectivity: Terrahost AS56655 in Norway and iFog AS34927 in Switzerland. Beyond transit, I peer with the FogIXP route servers (AS47498), which gives me direct access to over 3,500 peer prefixes; traffic to those networks bypasses transit entirely.

Across three independent upstream paths, the setup receives the full IPv6 routing table: 234,000+ prefixes. RPKI/ROA validation ensures route origin authenticity, with BGPalerter providing real-time monitoring for hijacks or leaks.
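Route origin validation boils down to comparing each received route against the set of published ROAs and classifying it as valid, invalid, or not-found (RFC 6811). The following is a minimal sketch of that decision, not the validator running on my routers. The `Roa` class, the function name, and the example ROA with a maximum length of 48 are assumptions for illustration.

```python
import ipaddress
from dataclasses import dataclass
from typing import Iterable, Union

Network = Union[ipaddress.IPv4Network, ipaddress.IPv6Network]


@dataclass(frozen=True)
class Roa:
    """Hypothetical in-memory ROA: prefix, maximum length, authorized origin."""
    prefix: Network
    max_length: int
    asn: int


def validate_origin(route_prefix: str, origin_asn: int, roas: Iterable[Roa]) -> str:
    """Classify a route as 'valid', 'invalid', or 'not-found' per RFC 6811."""
    route = ipaddress.ip_network(route_prefix)
    covered = False
    for roa in roas:
        # subnet_of() raises TypeError across IP versions, so check first.
        if route.version != roa.prefix.version:
            continue
        if route.subnet_of(roa.prefix):
            covered = True  # at least one ROA covers this route
            if route.prefixlen <= roa.max_length and origin_asn == roa.asn:
                return "valid"
    # Covered but no matching ROA means invalid; no covering ROA means not-found.
    return "invalid" if covered else "not-found"


# Assumed ROA for the prefix in this post; the real maxLength is not stated.
roas = [Roa(ipaddress.ip_network("2a0c:9a40:8e20::/48"), 48, 214304)]

print(validate_origin("2a0c:9a40:8e20::/48", 214304, roas))      # valid
print(validate_origin("2a0c:9a40:8e20::/48", 64512, roas))       # invalid
print(validate_origin("2a0c:9a40:8e20:100::/56", 214304, roas))  # invalid
print(validate_origin("2001:db8::/32", 214304, roas))            # not-found
```

A typical policy drops invalid routes and accepts valid and not-found ones, since a large share of the routing table still has no ROA at all.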

Getting the first session established was an exercise in patience and attention to detail. I spent many hours staring at `show bgp summary`, wondering why the state remained stubbornly “Active” rather than “Established.” The cause, eventually, was a typo in the peer AS number, the kind of mistake that feels embarrassing in retrospect but is apparently universal among anyone who has configured BGP for the first time.
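Why a single wrong digit is fatal: when a BGP speaker receives an OPEN message, it compares the sender's AS number against the one configured for that neighbor, and a mismatch is answered with a NOTIFICATION (OPEN Message Error, subcode Bad Peer AS, per RFC 4271) instead of moving toward Established. Here is a simplified sketch of that check. The function name and the mistyped value `56665` are hypothetical, and the sketch ignores 4-octet AS capability negotiation.

```python
from typing import Optional, Tuple

# Error codes from RFC 4271.
OPEN_MESSAGE_ERROR = 2  # NOTIFICATION error code
BAD_PEER_AS = 2         # subcode under OPEN Message Error


def check_open(configured_remote_as: int, received_as: int) -> Optional[Tuple[int, int]]:
    """Return a (code, subcode) NOTIFICATION if the peer AS is unexpected.

    Simplification: a real implementation also handles 4-octet AS numbers
    carried in a capability rather than in the OPEN header field.
    """
    if received_as != configured_remote_as:
        return (OPEN_MESSAGE_ERROR, BAD_PEER_AS)
    return None  # AS matches; the session can proceed


# Correct config: neighbor really is AS56655.
print(check_open(configured_remote_as=56655, received_as=56655))  # None

# Hypothetical typo in the config: 56665 instead of 56655.
print(check_open(configured_remote_as=56665, received_as=56655))  # (2, 2)
```

The session is torn down and retried on every attempt, so the summary output never shows Established; the router's BGP log is usually the faster place to spot the notification.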

There is a particular satisfaction in watching my /48 prefix propagate through the global routing table, seeing AS6939, AS174, AS3356 appear in the path — Hurricane Electric, Cogent, Lumen, the Tier 1 carriers that form the backbone of the internet, now routing traffic to infrastructure running partly in my apartment. This is what I wanted to understand, and now I do.

My prefix reaching Tier 1 ISPs through iFog and Terrahost — Hurricane Electric, Cogent, Lumen, and friends actually routing my homelab traffic.


The moment it connected

There is a moment, after weeks of debugging individual components in isolation, when you look at the system as a whole and realize it is actually working. Dozens of BGP sessions exchanging routes simultaneously across the iBGP mesh over IPsec: edge nodes announcing my prefix to upstream providers and the IXP, receiving the full internet routing table back, propagating it inward to the on-prem clusters where Cilium advertises pod and service IPs to the network.

All of this happening across encrypted tunnels spanning four countries.

I may have said something unprofessional out loud.

Regarding Cilium

The migration from Flannel to Cilium was, according to everyone I consulted, supposed to be risky. Downtime was expected. Connectivity loss was anticipated. I followed the documentation carefully, proceeded node by node, and… nothing broke. The same with ClusterMesh — enabled, configured, and the clusters discovered each other without incident.

Perhaps I was fortunate. Perhaps the documentation is simply excellent. Either way, I have no dramatic war stories to share here, which is honestly the best possible outcome.

Monitoring and automation

Infrastructure at this scale requires visibility. Grafana dashboards with Thanos handle metrics federation across sites, LibreNMS monitors the network devices, and Oxidized maintains configuration backups for the routers and firewalls. GitLab CI/CD pipelines automate infrastructure deployment — changes go through merge requests, not SSH sessions.

What I would approach differently

I attempted to learn Kubernetes, GitOps, and BGP simultaneously, all while completing Coursera courses for CKA preparation and Cisco NetDevOps certifications and building what amounts to production infrastructure. I would not recommend this approach.

The context switching was relentless — debugging a Cilium network policy with three browser tabs open about BGP path selection and another about Terraform state management. I followed my impulses and moved fast into domains where I had limited foundation, and while the results eventually worked, the process was more chaotic than it needed to be.

The plan now is to slow down and approach the fundamentals more deliberately. Kubestronaut (all five Kubernetes certifications) is the goal, but properly this time, with the discipline I should have exercised from the beginning.

Future direction

The current architecture is complete and stable, but there is always room for refinement. Consolidating more BGP responsibility into Cilium’s control plane might simplify the topology. Additional IXP peerings would improve path diversity further.

And documentation. I should write documentation before I forget how all of this actually works.

Resources

For those interested in attempting something similar:

- RIPE NCC - Autonomous System Numbers

- FRRouting Documentation

- Cilium BGP Control Plane

- iFog GmbH — excellent sponsor for RIPE resources, supportive of hobbyist allocations

Questions or comments? You can find me on LinkedIn or review sanitized configurations on GitHub.